Introduction

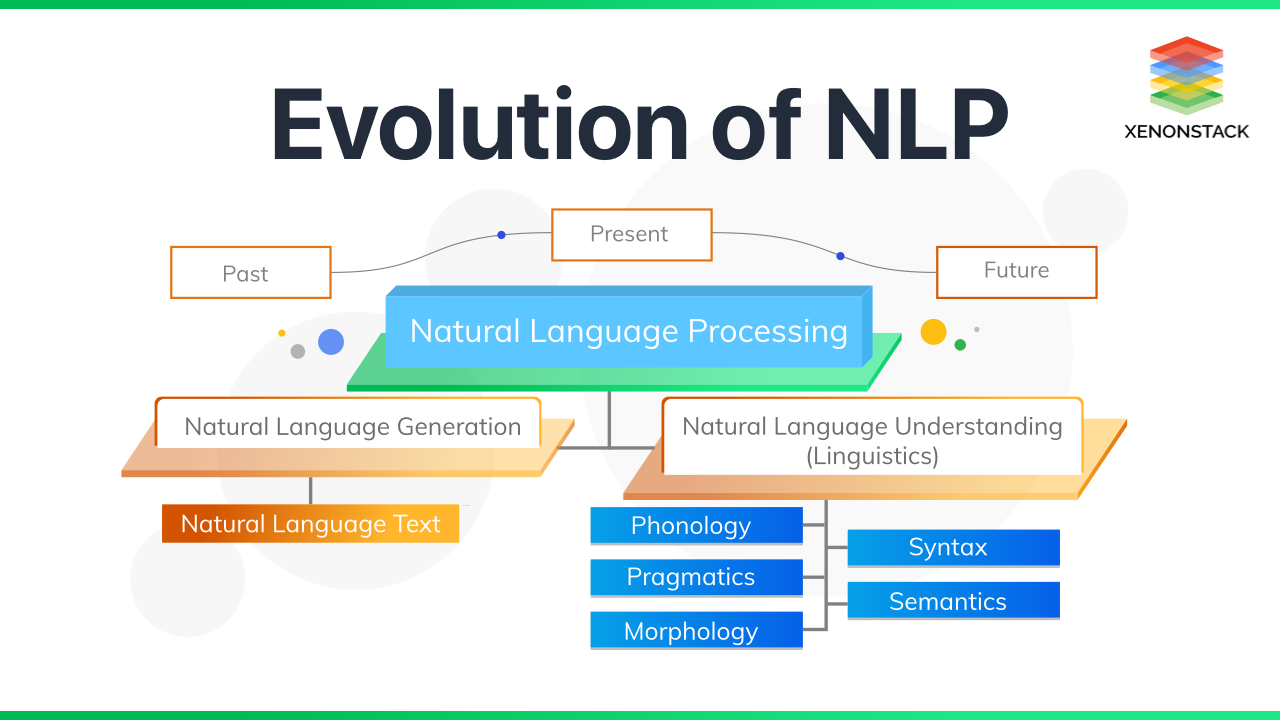

Natural Language Processing (NLP) is a field of artificial intelligence that focuses on the interaction between computers and human language. It involves the development of algorithms and models that enable computers to understand, interpret, and generate human language in a way that is both meaningful and contextually relevant.

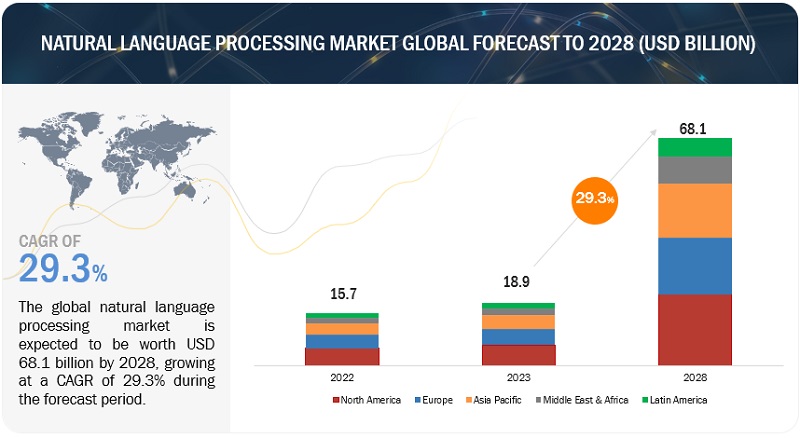

In recent years, there have been significant advancements in NLP, driven by the availability of large amounts of data, improvements in computational power, and breakthroughs in machine learning techniques. These developments have opened up new possibilities and applications for NLP, revolutionizing various industries and sectors.

In this blog post, we will explore some of the latest developments in NLP and discuss their implications. Whether you are a researcher, a developer, or simply curious about the future of language technology, this article will provide you with valuable insights into the exciting world of NLP.

1. Transformer Models

Transformer models, such as BERT (Bidirectional Encoder Representations from Transformers), have gained immense popularity in NLP. These models use attention mechanisms to capture the context of words in a sentence, allowing for more accurate language understanding. BERT, in particular, has shown remarkable performance in various NLP tasks, including question answering, sentiment analysis, and text classification.

2. Transfer Learning

Transfer learning has emerged as a powerful technique in NLP. It involves training a model on a large dataset and then fine-tuning it for specific tasks. This approach has significantly reduced the need for extensive labeled data, making it easier to develop NLP applications. Models like GPT-3 (Generative Pre-trained Transformer 3) have demonstrated the effectiveness of transfer learning by achieving state-of-the-art results in multiple language-related tasks.

3. Multilingual NLP

With the increasing demand for global communication, multilingual NLP has become a crucial area of research. Traditional NLP models were primarily designed for English, but recent developments have focused on supporting multiple languages. Multilingual models, such as XLM-RoBERTa, have been trained on vast amounts of multilingual data, enabling them to understand and generate text in various languages. This advancement has opened up new possibilities for cross-lingual applications and improved language understanding.

4. Contextual Word Embeddings

Word embeddings play a vital role in NLP tasks by representing words as dense vectors. Traditional word embeddings, like Word2Vec and GloVe, assign a fixed vector to each word, regardless of its context.

Summary

Natural Language Processing (NLP) has witnessed remarkable progress in recent years, thanks to advancements in data availability, computational power, and machine learning techniques. This blog post aims to shed light on some of the latest developments in NLP and their implications. By understanding these adva webpage ncements, readers can gain valuable insights into the future of language technology and its potential impact on various industries and sectors.

-

What is Natural Language Processing (NLP)?

Natural Language Processing (NLP) is a branch of artificial intelligence that focuses on the interaction between computers and human language. It involves the development of algorithms and models to enable computers to understand, interpret, and generate human language.

-

What are some recent advancements in Natural Language Processing?

Recent advancements in Natural Language Processing include the development of deep learning models such as recurrent neural networks (RNNs) and transformers, which have significantly improved the performance of various NLP tasks such as machine translation, sentiment analysis, and question answering.

-

How is Natural Language Processing used in real-world applications?

Natural Language Processing is used in various real-world applications such as virtual assistants (e.g., Siri, Alexa), chatbots, language translation services, sentiment analysis tools, and information extraction systems. It also plays a crucial role in analyzing and processing large amounts of textual data in fields like healthcare, finance, and social media.

-

What are the challenges in Natural Language Processing?

Some of the challenges in Natural Language Processing include dealing with ambiguity, understanding context, handling different languages and dialects, and accurately capturing the nuances of human language. Additionally, training NLP models requires large amounts of annotated data and significant computational resources.

-

What is the future of Natural Language Processing?

The future of Natural Language Processing looks promising. With ongoing research and advancements in deep learning, we can expect NLP models to become even more powerful and capable of understanding and generating human language with higher accuracy. NLP will continue to revolutionize various industries and enhance human-computer interaction.

Welcome to my website! My name is Timothy Woodruff, and I am a passionate and experienced IoT Solutions Architect. With a deep understanding of digital transformation, IoT innovations, hardware reviews, and AI updates, I am dedicated to helping businesses harness the power of technology to drive growth and efficiency.